How to Make an Artificial Intelligence System

- Sylvia Rose

- Mar 9, 2025

- 5 min read

Updated: Mar 15, 2025

Artificial Intelligence (AI) is a world revolution, virtually nonexistent in public use before the 21st century. Knowing how to make an AI gives insight to the workings of the system. AI creation follows certain steps.

Artificial intelligence enables machines to replicate intelligent human behaviors, or what it calculates human behavior to be, based on relevant data. Three necessary components are data, algorithms, and a technology stack.

Data

A model predicting house prices requires a dataset with relevant features like square footage, location, and recent sale prices. The type and amount of data needed depends on the chosen task for the AI.

Algorithms

These are driving forces behind AI systems. They can range from simple models like linear regression to advanced ones such as convolutional neural networks used in image and speech recognition.

Technology Stack

A tech stack refers to the assortment of technologies used in constructing an application. This includes programming languages, frameworks, databases, front-end and back-end tools.

1. Define Objective

This is anything from classifying customer feedback as positive or negative to predicting future sales based on historical data. It's recommended to create a comprehensive project brief.

Outline the objectives and constraints such as budget and timeline. It can be flexible but this basis clarifies and defines development process.

2. Choose AI Approach

AI uses various approaches, each suited for different tasks.

Machine Learning (ML): Algorithms are trained on data to learn patterns and make predictions. Common ML techniques include:

Supervised Learning: Training on labeled data (e.g., images labeled as "cat" or "dog").

Unsupervised Learning: Discovering patterns in unlabeled data (e.g., grouping customers based on their purchase history).

Reinforcement Learning: Training an agent to make decisions in an environment to maximize an undefined reward.

Rule-Based Systems: These rely on predefined rules crafted by "experts". The system applies these rules to make decisions based on specific inputs.

Natural Language Processing (NLP): This enables computers to understand and process human language.

3. Sources of Data

There are several ways to collect data.

Public Datasets: Sources like Kaggle (Google) and the UCI Machine Learning Repository offer free data for various applications.

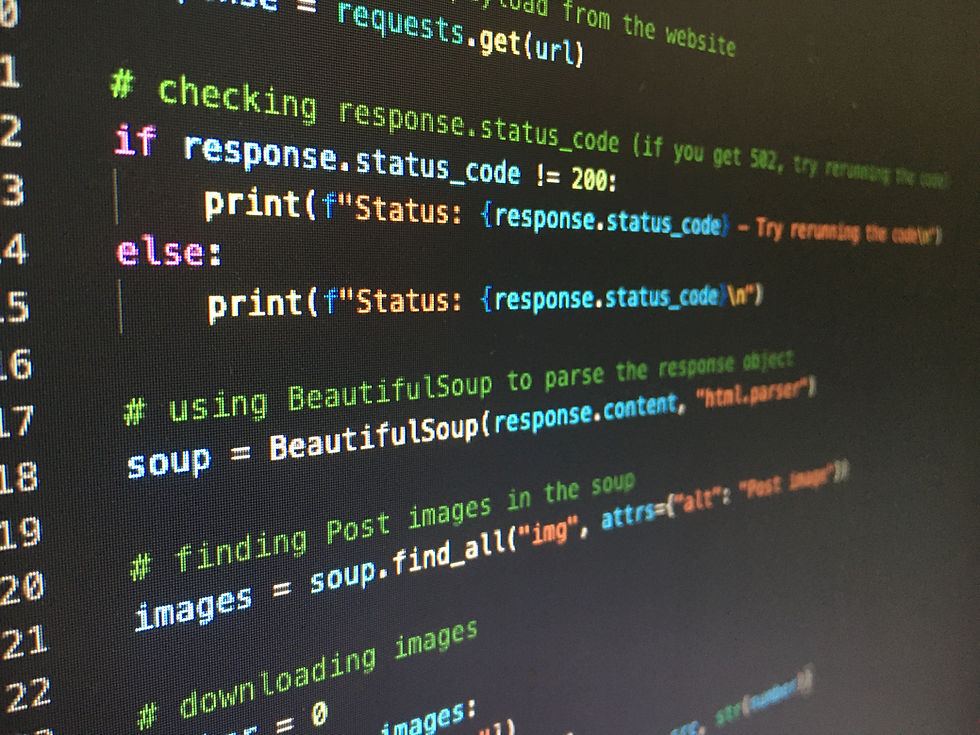

Web Scraping: If public datasets don’t meet your needs, consider web scraping for data extraction from websites. Web scraping tools are easily available online.

APIs: Use APIs from existing platforms to access structured data effortlessly. It can take a little time. For instance, to use Weather.com API, a developer account on the Weather Underground website, an API key, and an API request using proper endpoint and parameters are needed.

After collecting the data, it's necessary to clean and preprocess it. Proper data cleaning involves removing duplicates, fixing missing values, and normalizing datasets. Poor data quality messes up model accuracy.

Split Data: Divide data into training, validation, and testing sets.

Training Set: Used to train the AI model.

Validation Set: Used to fine-tune the model during training and prevent overfitting (where the model performs well on training data but poorly on new data).

Testing Set: Used to evaluate the final performance of the trained model.

The algorithm is fundamental to the success of an AI system. Again, choice depends on the type of problem and nature of the dataset. A few common algorithms and their applications include:

Decision Trees: Ideal for both classification and regression tasks. They are popular for their interpretability.

Support Vector Machines (SVM): Effective in high-dimensional spaces (ie datasets with multiple attributes), they are often used for image classification tasks.

Neural Networks: For complex problems like image recognition, celebrated for near-perfect accuracy.

4. Tech Stack: Tools and Libraries

Familiarity with programming languages like Python is helpful. Python ranks as a top programming language and is widely used in machine learning. R is also good, especially in statistical analysis.

Libraries such as TensorFlow (Google) and PyTorch (competitor of Google) are available. Others include Caffe, Theano, Apache MXNet, Chainer, OpenCV and Dlib. A solid tech stack goes far to increase speed and reduce errors.

5. Build and Train

Model Selection: Choose an appropriate model architecture. For image classification, a convolutional neural network (CNN) is commonly used.

Model Training: Feed the training data to the model and adjust its parameters to minimize the error between its predictions and the actual labels.

Monitor Performance: Use the validation set to monitor the model's performance during training. Adjust hyperparameters (e.g., learning rate, number of layers) to optimize performance.

6. Evaluate and Refine

After training, evaluate the model's performance on the testing set.

Evaluation Metrics: Use key performance indicators (KPIs) like accuracy, F1 score and ROC-AUC to assess model effectiveness. Cross-validation helps confirm the results.

Refinement: If the model's performance is not satisfactory, which is probable at first, try the following:

Gather more data: More data often leads to better performance.

Adjust hyperparameters: Experiment with different hyperparameters to find the optimal configuration.

Try a different model: Consider using a different model architecture.

Feature engineering: Explore creating new features from the existing data.

7. Deploy and Maintain

Deployment: Integrate the model into an application or system where it can be used to make predictions.

Cloud Services: Use platforms such as AWS or Google Cloud for scalable deployment.

On-Premises: For sensitive operations, deploying locally provides enhanced security, albeit requiring more resources.

API Integration: Create an API to let other applications interact with the model, making integration seamless.

Monitoring: Monitor the model's performance over time. Data distributions can change, leading to a decline in performance.

Retraining: Periodically retrain the model with new data to maintain its accuracy and relevance.

READ: Lora Ley Adventures - Germanic Mythology Fiction Series

READ: Reiker For Hire - Victorian Detective Murder Mysteries